The Rise of Small Language Models: Unlocking Personalized AI Companions

Imagine a world where your AI assistant isn’t just a generic chatbot spitting out canned responses—it’s a digital sidekick that knows you. It anticipates your needs, remembers your quirks, and adapts on the fly without guzzling massive amounts of energy or invading your privacy. Sounds like sci-fi? Well, buckle up, because the combination of Small Language Models (SLMs), their personalized cousins known as Personal Language Models (PLMs), and Large Language Models (LLMs) is making this a reality.

In this blog, we’ll dive into the perks of SLMs and PLMs over their beefier LLM counterparts, explore how blending them could revolutionize personalized AI and companion bots, and peek at the tech making it all trustworthy and efficient. Think of it as the ultimate AI tag-team: the heavyweight champ (LLMs) providing deep insights, while the nimble contenders (SLMs and PLMs) deliver speed and hyper-personalization. We’ll break down the differences, tackle issues like hallucinations, and spotlight how BurstIQ’s LifeGraph® bridges the gaps. By the end, you’ll see why this hybrid approach, especially with PLMs at the core, isn’t just a trend—it’s the breakthrough for making AI feel truly human.

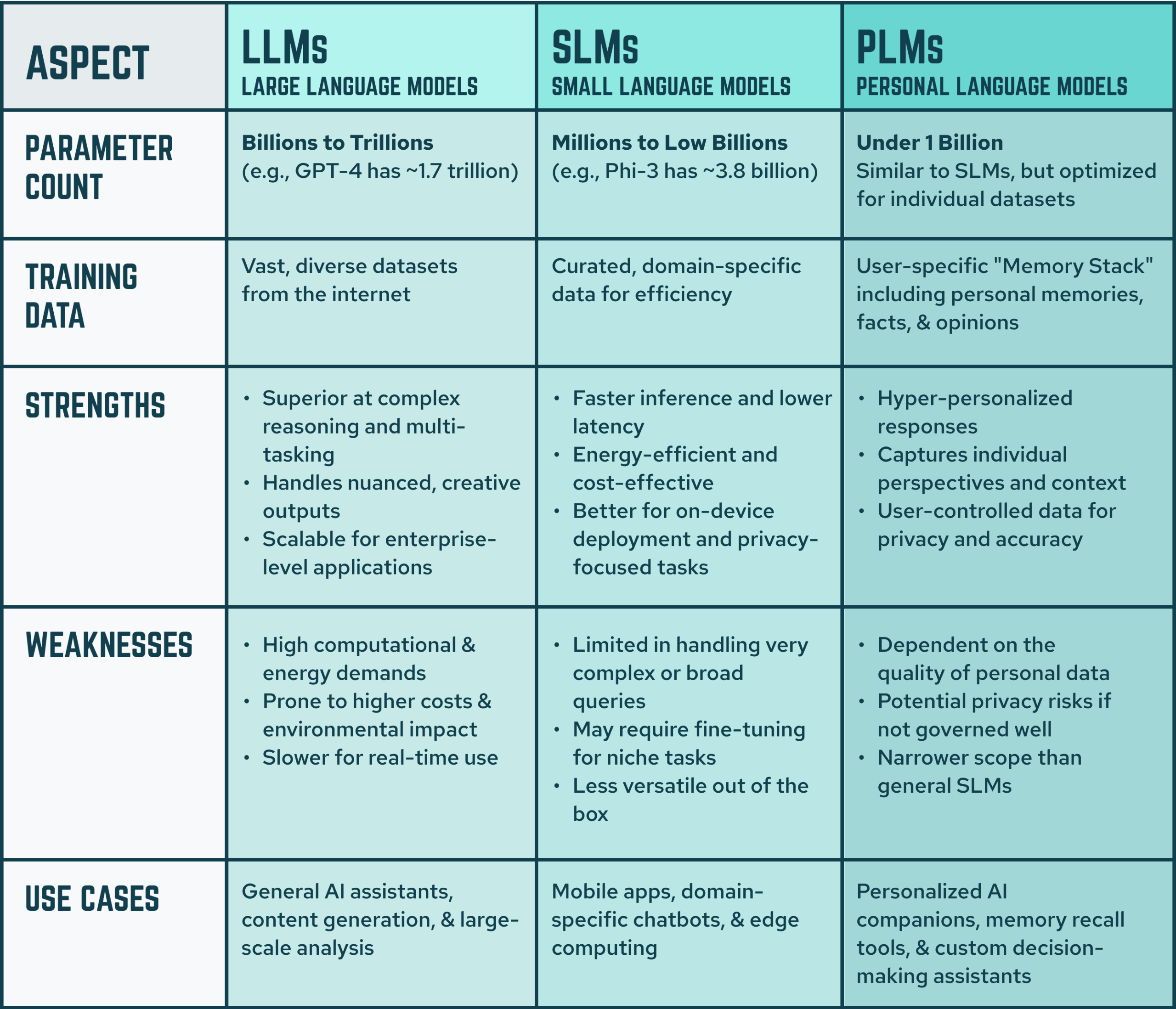

Defining & Contrasting LLMs, SLMs, & PLMs: Size, Scope, & Personal Touch

Large Language Models (LLMs) like those behind ChatGPT, Grok, Gemini, or Llama are the rockstars of AI: massive neural networks with billions of parameters, trained on vast datasets to handle everything from writing poetry to coding apps. They’re versatile powerhouses, excelling at complex reasoning and generating human-like text across a wide array of tasks. Small Language Models (SLMs) are their compact counterparts, with fewer parameters (from millions to a few billion) and trained on more specialized content, making them lighter, faster, and more efficient for specific, targeted jobs.

Taking it a step further, Personal Language Models (PLMs) are a specialized form of SLMs, fine-tuned or grounded in an individual user’s unique data, memories, facts, and opinions. Unlike general SLMs, which might be domain-specific (e.g., medical or legal), PLMs are hyper-personalized, learning from a user’s “Memory Stack” to reflect their personal perspectives and context. They’re often built on architectures similar to LLMs/SLMs but scaled down and “grounded” rather than pretrained on public data, using technologies like Generative Grounded Transformers (GGT). This makes PLMs ideal for creating AI companions that feel like extensions of yourself.

The key differences boil down to scale, training, and application. LLMs thrive on enormous datasets and computational resources, allowing them to tackle broad, intricate problems but at the cost of high energy use and slower response times. SLMs are distilled from larger models or trained on curated, domain-specific data, prioritizing efficiency and deployability on edge devices, such as smartphones. PLMs build on this by incorporating personal data silos, enabling true customization while maintaining the efficiency advantages of SLMs.
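To put the scale gap in rough numbers, here is a back-of-the-envelope sketch of how much memory a model needs just to hold its weights. It assumes memory is simply parameter count times bits per parameter and ignores everything else a running model needs (activations, caches, runtime overhead). The 3.8-billion figure matches small models such as Phi-3-mini; the 70-billion figure is a hypothetical LLM size chosen purely for illustration.

```python
def model_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Illustrative sizes only: one SLM-sized model and one hypothetical LLM-sized model.
models = [("SLM, 3.8B params", 3.8e9), ("LLM, 70B params", 70e9)]

for name, params in models:
    for bits in (16, 4):  # 16-bit weights vs. 4-bit quantized weights
        print(f"{name} at {bits}-bit: {model_memory_gb(params, bits):.1f} GB")
```

Under these assumptions the small model needs about 7.6 GB at 16-bit and about 1.9 GB at 4-bit, while the larger one needs about 140 GB and 35 GB. That difference is why SLM-sized models are realistic candidates for phones and laptops.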

To visualize this showdown, here’s a handy table comparing their strengths and weaknesses:


| | Large Language Models (LLMs) | Small Language Models (SLMs) | Personal Language Models (PLMs) |
| --- | --- | --- | --- |
| Parameter count | Billions to trillions (e.g., GPT-4 is reported, though not officially confirmed, to have ~1.7 trillion) | Millions to low billions (e.g., Phi-3-mini has ~3.8 billion) | Millions to low billions (similar in size to SLMs) |
| Training data | Vast, diverse datasets from the internet | Curated, domain-specific data for efficiency | User-specific “Memory Stack” including personal memories, facts, and opinions |
| Strengths | Superior at complex reasoning and multi-tasking; handles nuanced, creative outputs; scalable for enterprise-level applications | Faster inference and lower latency; energy-efficient and cost-effective; better for on-device deployment and privacy-focused tasks | Hyper-personalized responses; captures individual perspectives and context; user-controlled data for privacy and accuracy |
| Weaknesses | High computational and energy demands; prone to higher costs and environmental impact; slower for real-time use | Limited in handling very complex or broad queries; may require fine-tuning for niche tasks; less versatile out of the box | Dependent on the quality of personal data; potential privacy risks if not governed well; narrower scope than general SLMs |
| Use cases | General AI assistants, content generation, and large-scale analysis | Mobile apps, domain-specific chatbots, and edge computing | Personalized AI companions, memory recall tools, and custom decision-making assistants |

As you can see, LLMs are like a Swiss Army knife—versatile but bulky—and SLMs are a precision tool, sharp and portable. PLMs add a custom engraving to make it uniquely yours. The real magic happens when you combine them, with PLMs bridging the personal gap.

Underlying Technology & Governance: Building Reliable AI Foundations

To make LLMs, SLMs, and especially PLMs more reliable, we need robust tech stacks that handle data efficiently and ensure governance. Enter vector databases that enable Retrieval-Augmented Generation (RAG), and its evolved cousin, GraphRAG. These are the unsung heroes turning raw data into actionable insights, particularly for grounding PLMs in personal contexts.

Vector databases store data as high-dimensional floating-point vectors (embeddings), enabling fast similarity searches for tasks like semantic querying. They’re crucial for AI because they allow models to retrieve relevant information quickly without scanning entire datasets, boosting efficiency in LLMs, SLMs, and PLMs alike.
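To make similarity search concrete, here is a minimal sketch using cosine similarity over a toy in-memory index. Everything in it is an assumption for illustration: the three-dimensional vectors are made up (real embeddings come from an embedding model and have hundreds or thousands of dimensions), and the loop compares the query against every entry, whereas a real vector database typically uses an approximate nearest-neighbor index to avoid that full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k entries whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), text) for text, vec in index.items()]
    scored.sort(reverse=True)
    return [text for _, text in scored[:k]]


# Made-up 3-dimensional "embeddings" standing in for real model output.
index = {
    "likes window seats": [0.9, 0.1, 0.0],
    "allergic to peanuts": [0.0, 0.2, 0.9],
    "prefers morning flights": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # hypothetical embedding of a travel-related question

print(top_k(query, index, k=2))
# ['likes window seats', 'prefers morning flights']
```

The two travel-related entries score highest because their vectors point in nearly the same direction as the query, even though no keywords were matched.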

RAG enhances this by combining retrieval from external sources (such as vector databases) with generation, pulling in real-time data to ground responses and reduce errors. It’s like giving your AI a cheat sheet before it answers—essential for PLMs to integrate personal memories with broader knowledge.
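The “cheat sheet” idea can be sketched in a few lines: retrieve the most relevant notes, then place them in the prompt ahead of the question. This is a simplified illustration, not a production pipeline. The retriever here ranks by shared words as a stand-in for embedding search, the `memory_stack` entries are invented, and the final call to a language model is left out because it depends on whichever model you use.

```python
def retrieve(question, documents, k=2):
    """Stand-in retriever: rank documents by how many words they share with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, context_docs):
    """Assemble a grounded prompt: instructions, retrieved context, then the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. If the context is not enough, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Invented personal notes, standing in for a user's Memory Stack.
memory_stack = [
    "My favorite airline seat is a window seat",
    "I am allergic to peanuts",
    "My last trip was to Lisbon in May",
]

question = "Which seat is my favorite"
prompt = build_prompt(question, retrieve(question, memory_stack, k=1))
print(prompt)  # this string is what would be sent to the model
```

Telling the model to answer only from the supplied context is what grounds the response: the model is steered toward the retrieved facts rather than whatever it absorbed in training.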

GraphRAG takes it up a notch by incorporating knowledge graphs, structured networks of entities and relationships, into the retrieval process. This allows for multi-hop reasoning, where the AI connects dots across related concepts for deeper insights, which is perfect for PLMs to weave personal data into complex queries.
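Multi-hop reasoning is easiest to see on a tiny graph. The sketch below stores a hypothetical knowledge graph as (subject, relation, object) triples and walks outward from one entity, collecting every chain of up to three relationships. It illustrates only the traversal step; full GraphRAG systems also extract the graph from text and summarize it, which is not shown, and the entities here are invented.

```python
from collections import deque

# Hypothetical knowledge graph as (subject, relation, object) triples.
triples = [
    ("Alex", "visited", "Lisbon"),
    ("Lisbon", "located_in", "Portugal"),
    ("Portugal", "known_for", "pastel de nata"),
    ("Alex", "allergic_to", "peanuts"),
]


def neighbors(entity):
    """All outgoing (relation, object) edges for an entity."""
    return [(rel, obj) for subj, rel, obj in triples if subj == entity]


def multi_hop(start, max_hops):
    """Breadth-first walk collecting every relation path of up to max_hops edges."""
    paths = []
    queue = deque([(start, [])])
    while queue:
        entity, path = queue.popleft()
        if len(path) == max_hops:
            continue  # hop budget spent; do not expand further
        for rel, obj in neighbors(entity):
            new_path = path + [(entity, rel, obj)]
            paths.append(new_path)
            queue.append((obj, new_path))
    return paths


for path in multi_hop("Alex", max_hops=3):
    print(" -> ".join(f"{s} {r} {o}" for s, r, o in path))
```

The longest path links Alex to Lisbon, Lisbon to Portugal, and Portugal to pastel de nata. A chunk-based retriever would only surface that connection if a single passage happened to mention all three facts together.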

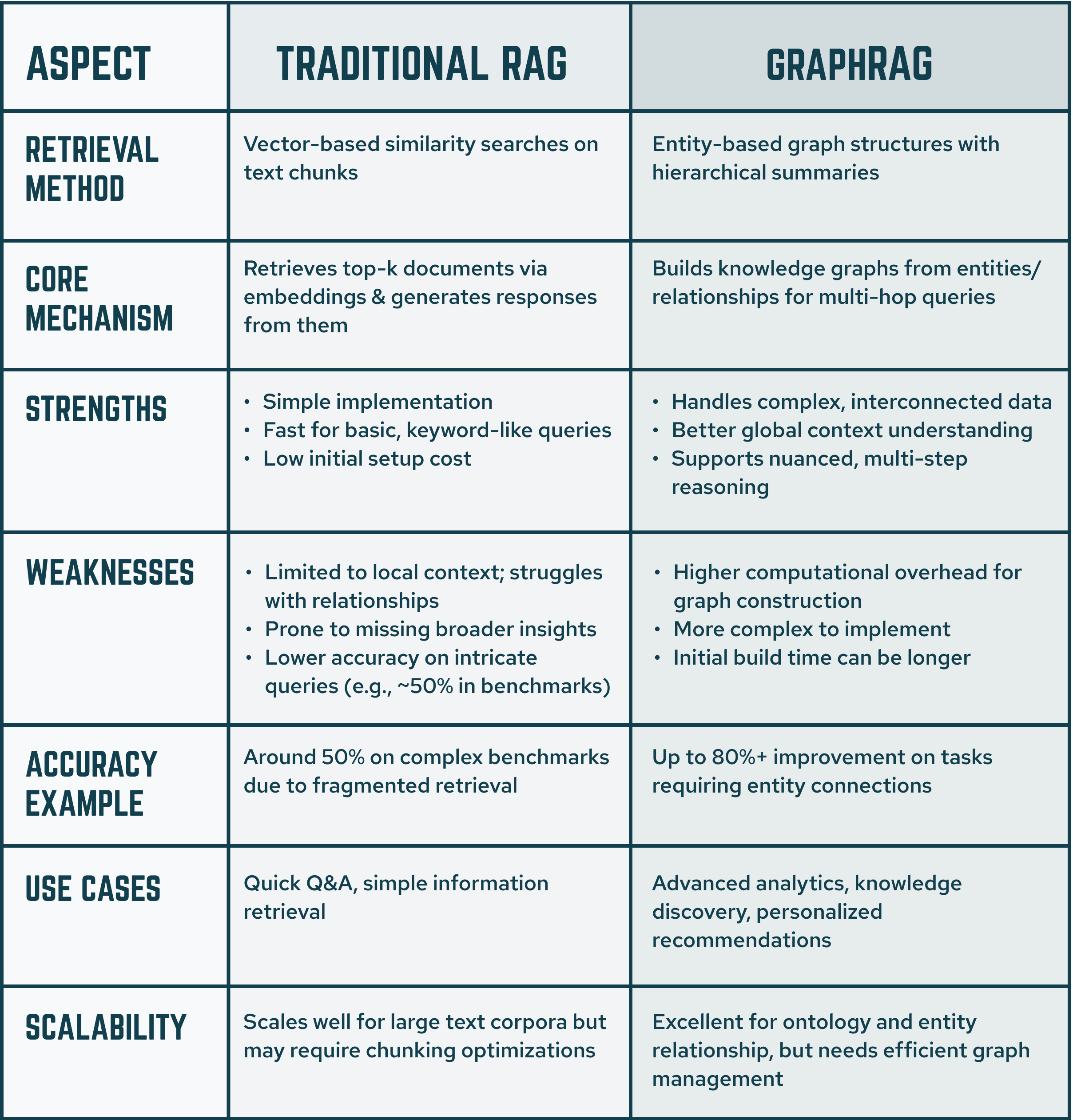

To better illustrate the differences, here’s a detailed table comparing Traditional RAG and GraphRAG:

| | Traditional RAG | GraphRAG |
| --- | --- | --- |
| Retrieval method | Vector-based similarity searches on text chunks | Entity-based graph structures with hierarchical summaries |
| Core mechanism | Retrieves top-k documents via embeddings and generates responses from them | Builds knowledge graphs from entities/relationships for multi-hop queries |
| Strengths | Simple implementation; fast for basic, keyword-like queries; low initial setup cost | Handles complex, interconnected data; better global context understanding; supports nuanced, multi-step reasoning |
| Weaknesses | Limited to local context and struggles with relationships; prone to missing broader insights; lower accuracy on intricate queries (e.g., ~50% reported in benchmarks) | Higher computational overhead for graph construction; more complex to implement; initial build time can be longer |
| Accuracy example | Reported at around 50% on complex benchmarks, attributed to fragmented retrieval | Reported improvements of up to 80% or more on tasks requiring entity connections |
| Use cases | Quick Q&A, simple information retrieval | Advanced analytics, knowledge discovery, personalized recommendations |
| Scalability | Scales well for large text corpora but may require chunking optimizations | Scales well for ontologies and entity relationships, but needs efficient graph management |

This comparison highlights how GraphRAG builds on traditional RAG to address its limitations, making it a game-changer for more sophisticated AI applications, including personalizing PLMs.

Governance ties it all together with provenance and rules for data quality, privacy, and ethics, ensuring these systems—especially PLMs with sensitive personal data—don’t run amok. Transparent audits and compliance (e.g., GDPR) are essential for trust.

Tackling Hallucinations & False Conclusions: The Data Management Imperative

Ah, hallucinations: the AI equivalent of confidently stating the sky is green. These occur when models generate plausible but incorrect information due to flawed training data, overfitting, or lack of context. For PLMs, which rely on personal data, this risk is amplified if the Memory Stack is incomplete or biased. To minimize them, we need broader data management, including high-quality, diverse datasets, rigorous evaluation, and a trusted, transparent ecosystem.

BurstIQ’s approach shines here. LifeGraph technology transforms fragmented data into contextual knowledge graphs of smart data, ensuring clean, connected data with blockchain-backed audit trails for transparency. This privacy-first ecosystem uses dynamic consent and governance to create a reliable “source of truth,” minimizing risks like hallucinations by feeding AI, including PLMs, verifiable, high-integrity personal data. It’s like building AI on a rock-solid foundation rather than quicksand.

Integral to this solution is the concept of data provenance, which tracks the origin, history, and transformations of data used by AI systems. Provenance ensures that every piece of information fed into LLMs, SLMs, or PLMs can be traced back to its source, verifying its authenticity and integrity. By leveraging LifeGraph’s blockchain-based audit trails, BurstIQ embeds provenance into the data layer, enabling AI to distinguish between reliable and questionable inputs. This transparency not only reduces hallucinations by grounding responses in verified data but also builds user trust, particularly for PLMs where personal data accuracy is critical.
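To show the general idea behind a tamper-evident audit trail, here is a minimal hash-chain sketch. It is not LifeGraph’s implementation, which this post does not describe in detail; the record fields (`data`, `source`, `prev_hash`) and function names are hypothetical. Each record stores the hash of the one before it, so changing any earlier entry breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body):
    """Stable SHA-256 hash of a record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_record(chain, data, source):
    """Append a record that links back to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"data": data, "source": source, "prev_hash": prev_hash}
    chain.append({**body, "hash": _digest(body)})


def verify(chain):
    """Recompute every hash and check that each link points at its predecessor."""
    prev_hash = GENESIS
    for record in chain:
        body = {key: record[key] for key in ("data", "source", "prev_hash")}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True


chain = []
add_record(chain, "allergic to peanuts", source="user entry")
add_record(chain, "visited Lisbon in May", source="calendar import")
print(verify(chain))  # True

chain[0]["data"] = "allergic to shellfish"  # simulate tampering with history
print(verify(chain))  # False
```

An AI system reading from a store like this could check the chain before trusting a fact, which is the sense in which provenance helps separate reliable inputs from questionable ones.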

Mind the Memory Gap: Overcoming AI’s Retention Challenges

Beyond hallucinations, another critical hurdle for AI, particularly in personalized and companion systems, is the “memory gap”, a limitation stemming from the finite context windows in models like LLMs. AI excels at processing information in the moment but struggles with long-term retention. The context window acts as a short-term memory barrier, causing AI to “forget” previous interactions or user-specific details over time, which diminishes its utility for ongoing, contextual conversations. This gap is especially problematic for companion AIs and PLMs, where consistent recall of personal history, preferences, and evolving contexts is essential for building trust and delivering value. Without addressing this, AI remains reactive rather than proactive, limiting its role as a true personal assistant.

BurstIQ’s LifeGraph offers a compelling solution to this memory gap by enabling secure, portable knowledge graphs that can be stored locally. This local storage ensures privacy and offline accessibility, while allowing the LifeGraph to “plug in” seamlessly to AI models like SLMs, PLMs, or LLMs. By providing on-demand context and personalization through structured, user-owned data, LifeGraph extends the effective memory beyond the model’s inherent limits, without requiring massive context windows or constant cloud dependency. This approach not only enhances retention but also empowers users to control their data, making AI companions more reliable and tailored to individual lives.
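The general pattern of extending memory beyond the context window can be sketched with a small local store that outlives any single conversation. This is an illustrative assumption, not how LifeGraph works internally: the `LocalMemory` class, its JSON file format, and the word-overlap lookup are all hypothetical, and a real system would use structured graphs and proper retrieval.

```python
import json
import os
import tempfile


class LocalMemory:
    """Hypothetical on-device memory: facts persist in a local JSON file."""

    def __init__(self, path):
        self.path = path
        self.facts = []
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                self.facts = json.load(f)

    def remember(self, fact):
        """Store a fact and write it to disk immediately."""
        self.facts.append(fact)
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(self.facts, f)

    def context_for(self, question, max_facts=2):
        """Return only the stored facts that share a word with the question."""
        words = set(question.lower().split())
        relevant = [f for f in self.facts if words & set(f.lower().split())]
        return relevant[:max_facts]


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "memory.json")

    session_one = LocalMemory(path)  # first conversation
    session_one.remember("allergic to peanuts")
    session_one.remember("prefers window seats")

    session_two = LocalMemory(path)  # a later conversation, fresh model context
    recalled = session_two.context_for("any peanuts in this snack")
    print(recalled)  # ['allergic to peanuts']
```

The second session starts with no conversational history, yet it recovers the relevant fact from local storage and can hand just that fact to the model, so the context window only has to hold what matters for the current question.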

Combining SLMs, PLMs, & LLMs: Forging a Smarter Agentic Ecosystem

Why choose when you can have it all? Combining SLMs (and their personalized PLM variants) with LLMs creates an agentic AI ecosystem—autonomous agents that plan, reason, and act collaboratively. This hybrid setup scales beautifully: Imagine an AI companion where an LLM orchestrates the big picture (e.g., trip planning), SLMs handle domain specifics, and a PLM fine-tunes details based on your personal history (e.g., past travel preferences stored in your Memory Stack). It’s modular, cost-effective, and unlocks new revenue streams by accelerating AI adoption. The result? A broader, more intelligent network of agents that feel like true companions.
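One way to picture the division of labor is a simple router that sends each step of a plan to the cheapest model tier able to handle it. The sketch below is deliberately naive and entirely hypothetical: the keyword sets, the `route` function, and the hard-coded plan (which stands in for what an orchestrating LLM might produce) are illustrative assumptions, and a real system would use a learned or model-based router.

```python
# Hypothetical trigger words for each tier.
PERSONAL_WORDS = {"my", "me", "i"}
DOMAIN_WORDS = {"flight", "hotel", "visa"}


def route(subtask):
    """Pick a model tier for a subtask: PLM for personal, SLM for domain, else LLM."""
    words = set(subtask.lower().split())
    if words & PERSONAL_WORDS:
        return "PLM"
    if words & DOMAIN_WORDS:
        return "SLM"
    return "LLM"


# A hard-coded plan standing in for an LLM's decomposition of "plan my trip".
plan = [
    "Outline a week in Portugal",
    "Find a flight to Lisbon",
    "Pick my usual seat",
]

for step in plan:
    print(f"{route(step)} <- {step}")
```

Here the open-ended outline goes to the LLM, the flight search to a domain SLM, and the seat choice to the PLM, mirroring the trip-planning example above.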

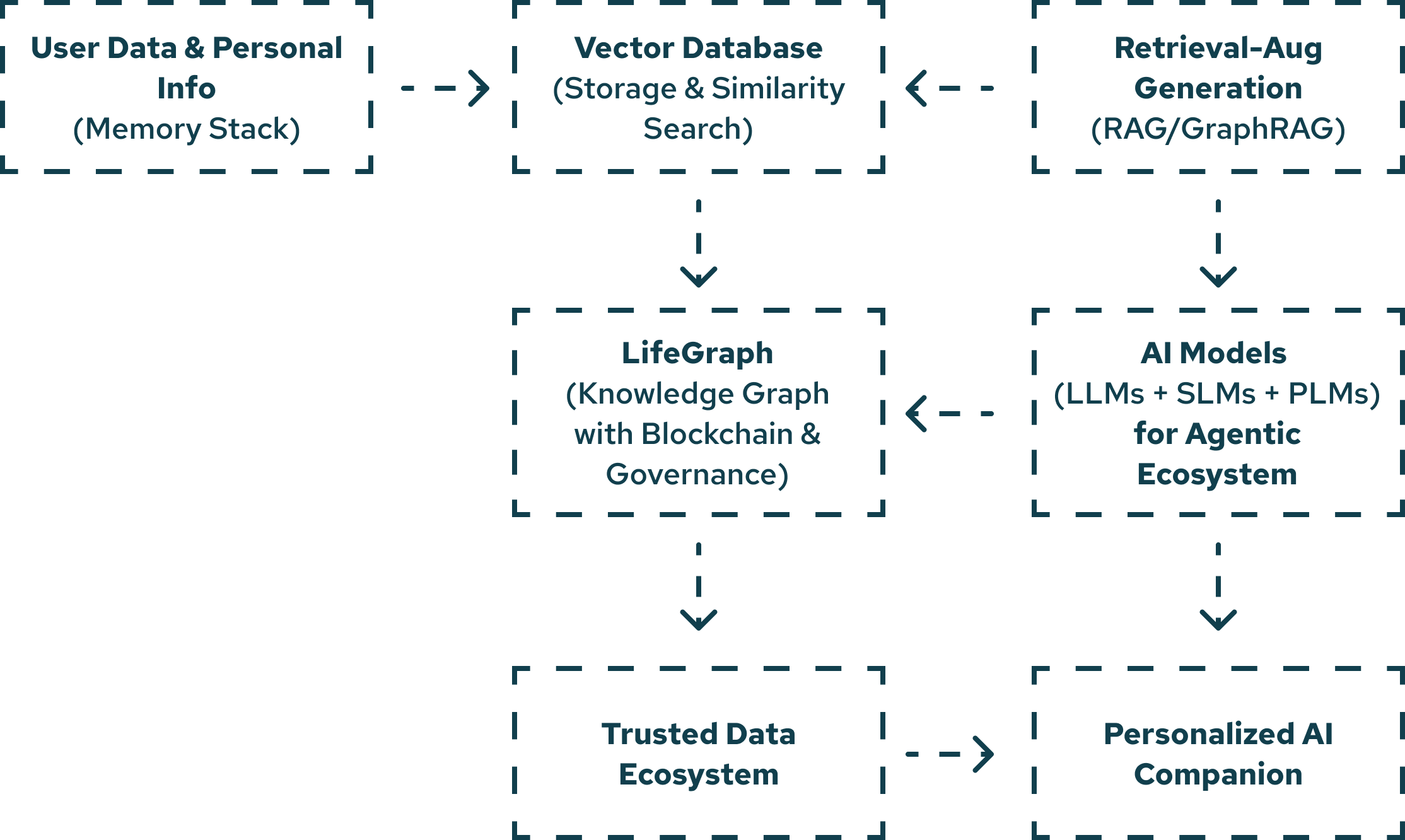

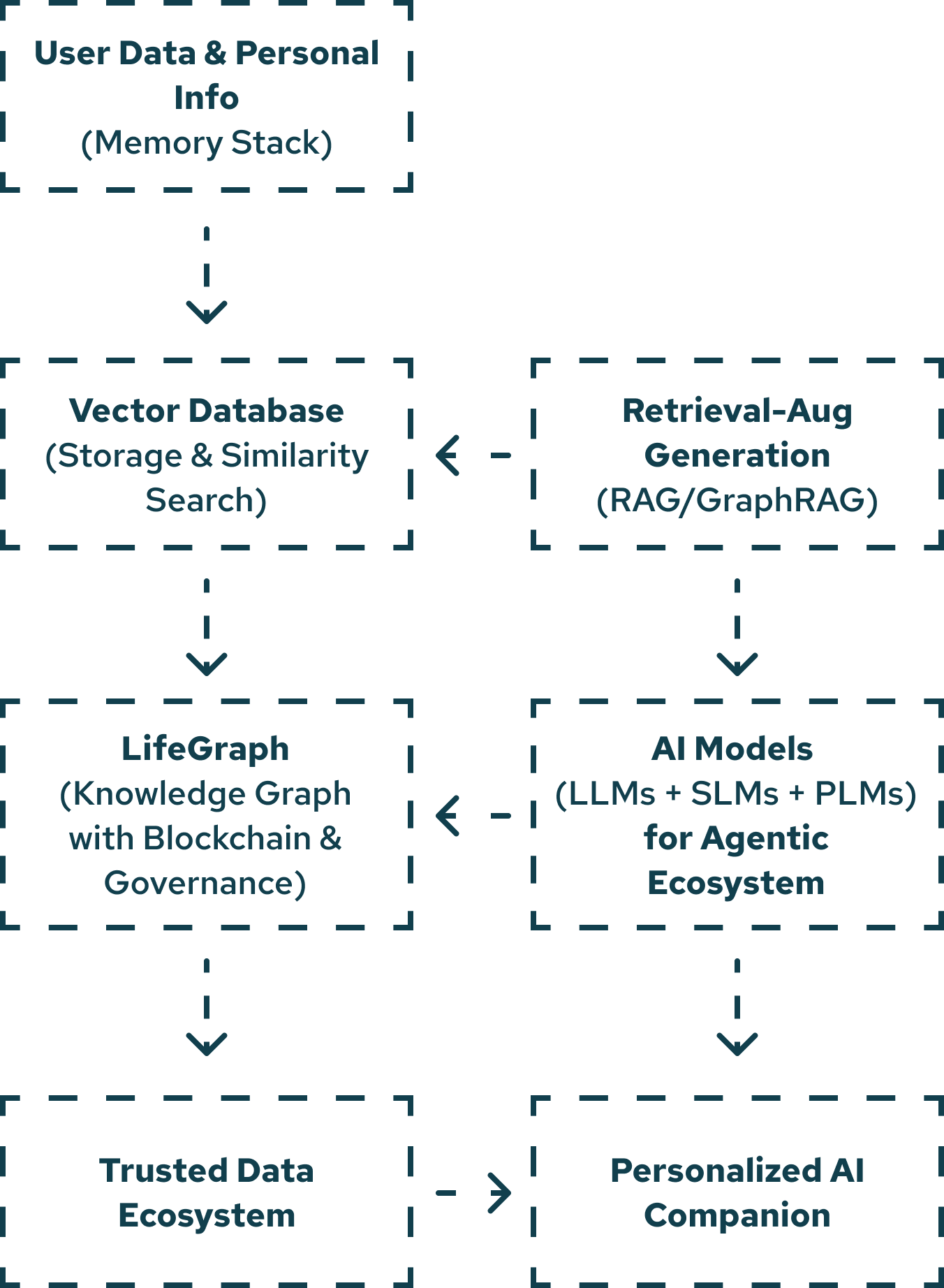

To visualize how everything fits together, here’s a conceptual diagram showing the integrated ecosystem:

In this flow, user data (including personal Memory Stacks for PLMs) feeds into vector databases for efficient storage. RAG or GraphRAG retrieves and augments information, enhanced by LifeGraph’s structured, secure knowledge graphs. This powers a hybrid LLM/SLM/PLM setup, creating a reliable, personalized AI companion.

Personalizing Larger Models with BurstIQ’s LifeGraph

BurstIQ’s LifeGraph takes personalization to the next level by turning data into “Smart Data Objects”: self-contained, context-rich assets that integrate seamlessly with AI, making it easier to build and maintain PLMs. For larger models, it provides unified 360-degree knowledge graphs, allowing LLMs to access personalized insights without retraining on massive datasets, essentially enabling PLM-like functionality on top of LLMs. This reduces processing by focusing on relevant, deduplicated data and slashes storage needs through efficient integration of LifeGraph data ecosystems. The blockchain layer ensures privacy, making it perfect for companion AIs that evolve securely with your life data, grounding PLMs in user-controlled consent.

Conclusion: Bridging Gaps for Next-Level AI

The AI market is booming, but gaps persist: high costs, privacy and regulatory requirements, hallucinations, and inefficient scaling hinder true personalization. We need ecosystems that prioritize trusted data layers, modular architectures, and governance to achieve agentic, companion-level AI, with PLMs leading the charge for individual empowerment.

LifeGraph from BurstIQ addresses many of these by offering scalable, privacy-preserving knowledge graphs that accelerate AI adoption by 4x, automate processes, and empower secure innovation—particularly for building and integrating PLMs. It solves data silos, ensures transparency, and optimizes resources—paving the way for a future where AI isn’t just smart, it’s yours. As SLMs, PLMs, and LLMs team up, tools like this could be the key to making personalized AI ubiquitous.

What’s your take? Are you ready for your own AI sidekick? Let’s connect to discuss your vision for truly personalized AI.