Unlocking the Power of Retrieval Augmented Generation (RAG):

From Basics to Advanced Graph Innovations

In the rapidly evolving world of artificial intelligence, Retrieval Augmented Generation (RAG) has emerged as a game-changing technique that bridges the gap between large language models (LLMs) and real-world knowledge.

What is Retrieval Augmented Generation (RAG)?

RAG is an AI framework that enhances the capabilities of generative models by leveraging external knowledge retrieval. Fundamentally, RAG merges two main processes: retrieval, where relevant information is fetched from a knowledge base or database, and generation, where an LLM uses that retrieved data to create accurate, contextually rich responses. Unlike traditional LLMs that depend solely on their pre-trained parameters, RAG enables models to reference authoritative external sources, such as documents, databases, or web content, to base their outputs on factual data.

The process typically works like this:

- A user query is processed into a searchable form, typically by embedding it or extracting its key terms.

- Relevant documents or data chunks are retrieved using similarity search techniques.

- The LLM then generates a response augmented with the retrieved information, reducing the risk of generating incorrect or outdated content.
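The three steps above can be sketched in a few lines. This is a minimal illustration rather than a production design: the `DOCUMENTS` list, the word-overlap scoring in `retrieve`, and the prompt wording in `build_prompt` are all assumptions made for the example, and the final call to an LLM is left out because it depends on the provider you use.

```python
import re
from collections import Counter

# Toy knowledge base (assumed for this example); a real system would
# index chunks of actual documents.
DOCUMENTS = [
    "Aspirin is commonly used to relieve headaches and reduce fever.",
    "Graph databases store data as nodes, edges, and properties.",
    "Vector databases support approximate nearest neighbor search.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by shared tokens with the query.

    Word overlap is a simple stand-in for similarity search over embeddings.
    """
    query_tokens = Counter(tokenize(query))
    scored = []
    for doc in documents:
        overlap = sum((query_tokens & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share nothing with the query.
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved passages and the question into one prompt."""
    sources = "\n".join(f"[{i + 1}] {passage}" for i, passage in enumerate(context))
    return (
        "Answer using only the sources below. Say so if they are insufficient.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}"
    )


query = "What is aspirin used for?"
prompt = build_prompt(query, retrieve(query, DOCUMENTS))
print(prompt)  # this string is what would be sent to the LLM
```

Numbering the sources in the prompt is one simple way to let the model cite which passage supports its answer.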

The approach was introduced in the 2020 research paper “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” by Lewis et al. and has since become a staple in applications like chatbots, question-answering systems, and content generation tools, where accuracy and up-to-date information are paramount.

Why is RAG Important to AI?

RAG addresses several critical limitations of standalone LLMs, making it indispensable for building reliable AI systems. One of the primary challenges with LLMs is “hallucination”: generating plausible but factually incorrect information, often because of gaps in training data. RAG mitigates this by allowing models to pull in real-time or domain-specific knowledge, so that responses can be grounded in verifiable sources.

Key benefits include:

- Access to Current Information: LLMs are trained on static datasets, so their knowledge stops at the training cutoff. RAG enables integration with live data sources, such as news feeds or social media, keeping AI responses relevant.

- Improved Accuracy and Reliability: By citing external sources, RAG boosts trust in AI outputs, which is crucial for enterprise applications.

- Cost-Efficiency: RAG reduces the need for expensive fine-tuning or retraining of LLMs by supplying new knowledge through retrieval, making AI scaling more affordable.

- Expanded Use Cases: RAG enables AI to handle complex queries involving proprietary or sensitive data, reducing risks and enhancing user satisfaction.

In essence, RAG transforms AI from a “know-it-all” model prone to errors into a collaborative system that leverages external expertise, fostering more ethical and effective AI deployments.

Current Techniques to Implement RAG Models

Implementing RAG involves a pipeline that combines data preparation, retrieval, and generation.

Here’s a breakdown of common techniques:

- DATA PREPARATION & INDEXING:

  - Chunking: Break documents into smaller, manageable pieces (e.g., paragraphs or sentences) to improve retrieval precision.

  - Embedding: Convert chunks into vector representations using models like Bidirectional Encoder Representations from Transformers (BERT) or Sentence Transformers. These embeddings capture semantic meaning for similarity searches.

- STORAGE & RETRIEVAL:

  - Use vector databases to store embeddings for fast approximate nearest neighbor (ANN) searches.

  - For queries, embed the input and retrieve the top-k relevant chunks based on cosine similarity or other metrics.

- GENERATION & AUGMENTATION:

  - Feed retrieved chunks into an LLM along with the query to generate responses.

  - Advanced methods include reranking retrieved results or using chain-of-thought prompting to refine outputs.

- OPTIMIZATION BEST PRACTICES:

  - Hybrid search combining keyword and semantic methods.

  - Fine-tuning embeddings for domain-specific data.

  - Monitoring for hallucinations with techniques like self-consistency checks.

  - Reranking retrieved candidates after the initial search, before they reach the LLM.
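To make the chunking, similarity, and hybrid search ideas above more concrete, here is a small sketch using only the Python standard library. Several things are assumptions made for illustration: the word-window chunker, the bag-of-words `embed` function (a real pipeline would call a trained embedding model), and the `alpha` weight that blends the two scores. Because the toy embedding is itself lexical, the "semantic" and keyword signals are more correlated here than they would be with a real model.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: a sparse bag-of-words vector (assumption for the demo)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def keyword_score(query: str, chunk: str) -> float:
    """Fraction of distinct query terms that appear in the chunk."""
    query_terms = set(embed(query))
    if not query_terms:
        return 0.0
    return len(query_terms & set(embed(chunk))) / len(query_terms)


def hybrid_top_k(query: str, chunks: list[str], k: int = 2, alpha: float = 0.5):
    """Return the k best (score, chunk) pairs under a blended score.

    `alpha` weights the vector similarity; `1 - alpha` weights the keyword match.
    """
    query_vector = embed(query)
    scored = [
        (
            alpha * cosine(query_vector, embed(chunk))
            + (1 - alpha) * keyword_score(query, chunk),
            chunk,
        )
        for chunk in chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


text = (
    "Graph databases store data as nodes and edges. "
    "Vector databases store embeddings for nearest neighbor search. "
    "Hybrid search combines keyword matching with semantic similarity."
)
for score, chunk in hybrid_top_k("vector embeddings search", chunk_words(text)):
    print(f"{score:.2f}  {chunk}")
```

Overlapping windows are a common way to avoid cutting a sentence's context in half at a chunk boundary; the right `size` and `overlap` depend on the documents and the embedding model.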

Explaining Graph Databases & Knowledge Graphs

To appreciate advanced RAG variants, it’s essential to understand graph technologies.

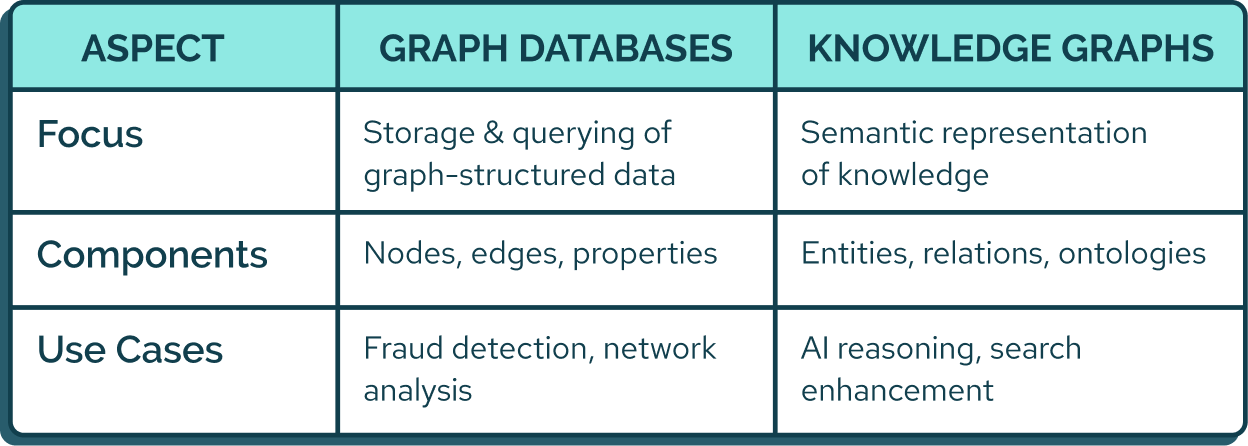

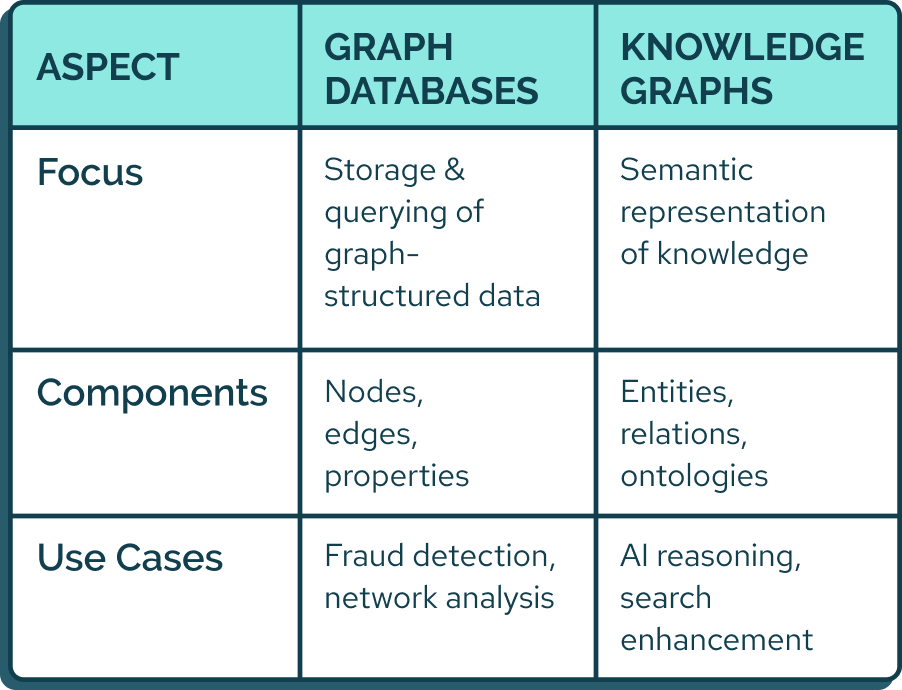

Graph Databases: These are specialized databases that store data as nodes (entities), edges (relationships), and properties (attributes). Unlike relational databases with tables, graph databases excel at querying interconnected data, making them ideal for scenarios involving complex relationships, such as social networks or recommendation systems. They support efficient traversals, like finding shortest paths or community detection.

Knowledge Graphs: A knowledge graph is a structured representation of real-world knowledge, built as a network of entities (nodes) and their relationships (edges), often enriched with semantics like ontologies. For instance, in a medical knowledge graph, “Aspirin” (node) might connect to “treats” (edge) “Headache” (node). Knowledge graphs organize data for inference and discovery, enabling AI to reason over facts rather than just patterns. They are often stored in graph databases, emphasizing semantic meaning and interoperability.
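As a rough illustration of both ideas, the sketch below stores a tiny knowledge graph as (subject, relation, object) triples and runs a shortest-path traversal over it. Only the "Aspirin treats Headache" fact comes from the example above; the other triples, the `inverse_` naming for reverse edges, and the helper names are assumptions added for the demo. A real graph database would handle storage, indexing, and querying through its own query language.

```python
from collections import defaultdict, deque

# Each fact is a (subject, relation, object) triple.
# The first triple mirrors the example in the text; the rest are illustrative.
TRIPLES = [
    ("Aspirin", "treats", "Headache"),
    ("Aspirin", "is_a", "NSAID"),
    ("Ibuprofen", "is_a", "NSAID"),
    ("Ibuprofen", "treats", "Fever"),
]


def build_graph(triples):
    """Build an adjacency list with both forward and inverse edges."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
        graph[obj].append((f"inverse_{relation}", subject))
    return graph


def objects_of(graph, subject, relation):
    """Return every node reachable from `subject` via one `relation` edge."""
    return [node for rel, node in graph.get(subject, []) if rel == relation]


def shortest_path(graph, start, goal):
    """Breadth-first search; returns the list of nodes on a shortest path."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for _relation, neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no connection between the two nodes


graph = build_graph(TRIPLES)
print(objects_of(graph, "Aspirin", "treats"))
print(shortest_path(graph, "Headache", "Fever"))
```

The traversal connects "Headache" to "Fever" through the shared "NSAID" class, which is the kind of indirect relationship that is awkward to express in a table join.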


Benefits of GraphRAG vs. Traditional Approach to RAG

Traditional RAG relies on vector embeddings and similarity search, which is effective for unstructured text but struggles with relational data. GraphRAG integrates knowledge graphs for retrieval, offering superior handling of connections and context.

Benefits of GraphRAG:

- Better Relational Reasoning: Graphs capture entity relationships, enabling complex queries like “What companies are connected to this investor through funding rounds?”, something vector search might miss.

- Improved Accuracy for Multi-Faceted Questions: It decomposes queries into sub-queries over graphs, providing more comprehensive answers than flat vector retrieval.

- Enhanced Contextual Relevance: By traversing relationships, GraphRAG uncovers hidden insights, leading to richer responses that are less prone to hallucination.

- Hybrid Potential: Combining graph and vector methods yields the best of both worlds: semantic search with structural depth.
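The investor question above is a two-hop pattern: investor to funding round, then funding round to company. A minimal sketch of how a graph retriever might answer it follows. The edge data, the relation names `PARTICIPATED_IN` and `FUNDED`, and the helper functions are all hypothetical, chosen only to show the shape of the traversal.

```python
# Hypothetical edges in the form (source, relation, target).
EDGES = [
    ("Investor A", "PARTICIPATED_IN", "Round 1"),
    ("Investor A", "PARTICIPATED_IN", "Round 2"),
    ("Investor B", "PARTICIPATED_IN", "Round 2"),
    ("Round 1", "FUNDED", "Company X"),
    ("Round 2", "FUNDED", "Company Y"),
    ("Round 3", "FUNDED", "Company Z"),
]


def follow(edges, sources, relation):
    """Return all targets reachable from `sources` via one `relation` edge."""
    return {
        target
        for source, rel, target in edges
        if source in sources and rel == relation
    }


def companies_for_investor(edges, investor):
    """Two-hop traversal: investor -> funding rounds -> companies."""
    rounds = follow(edges, {investor}, "PARTICIPATED_IN")
    return sorted(follow(edges, rounds, "FUNDED"))


def funding_paths(edges, investor):
    """Spell out each path so it can be passed to an LLM as explicit context."""
    paths = []
    for source, rel, funding_round in edges:
        if source == investor and rel == "PARTICIPATED_IN":
            for round_source, round_rel, company in edges:
                if round_source == funding_round and round_rel == "FUNDED":
                    paths.append(f"{investor} -> {funding_round} -> {company}")
    return paths


print(companies_for_investor(EDGES, "Investor A"))
for path in funding_paths(EDGES, "Investor A"):
    print(path)
```

Returning the full paths, not just the final company names, gives the generation step the intermediate facts it needs to explain why each company is connected.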

Traditional vector RAG is simpler and has lower entry barriers. However, for domains with intricate relationships, GraphRAG can outperform it by reducing noise and improving precision.

BurstIQ LifeGraph:

Next-Gen Security and Graph Technology Powering RAG Innovation

BurstIQ’s LifeGraph® platform is a breakthrough for graph-based RAG. By combining knowledge graphs with blockchain and privacy-enhancing data (PEDs), plus LifeGraph Smart Data Objects (SDOs), it creates a secure, decentralized data ecosystem—perfect for sensitive AI applications.

LifeGraph organizes data as interconnected knowledge graphs, centered around individuals or entities, rather than silos. Its extended capabilities include:

- Graph Features: Knowledge graphs enable real-time, interconnected intelligence, uncovering relationships while respecting ownership and governance.

- Security Enhancements: Blockchain ensures immutability and auditability, with decentralized storage and cryptographic ownership preventing tampering. PEDs like encryption, anonymization, and granular consent mechanisms protect privacy.

- Native Vector Database Capabilities: LifeGraph supports native vector searches, facilitating seamless hybrid retrieval. This allows for efficient storage and querying of high-dimensional embeddings alongside graph structures, supporting advanced semantic search and similarity matching directly within the platform.

For RAG, LifeGraph is a breakthrough because it provides an AI-ready, trustworthy knowledge base. Traditional RAG introduces new and significant challenges in data privacy and security, especially with sensitive information like health records. LifeGraph ensures compliant, high-quality data retrieval, reducing risks in AI generation and enabling secure multi-party collaborations. The addition of native vector capabilities further elevates this by facilitating hybrid GraphRAG systems, where vector-based semantic retrieval complements graph-based relational and semantic reasoning. This results in more precise, context-aware augmentations, particularly useful for complex queries in domains like healthcare, where understanding both relationships and similarities in data (e.g., patient symptoms and medical literature) is critical.

Why is this important? In an era of increasing AI adoption, trust is paramount. LifeGraph empowers secure data sharing, incentivizes participation, and fuels more accurate AI insights by maintaining data integrity and transparency. With native vector support, it addresses scalability challenges in traditional graph systems, allowing for faster processing of large-scale unstructured data while preserving security and privacy. This hybrid approach not only minimizes hallucinations in generated outputs but also opens doors to innovative applications, such as personalized medicine or fraud detection, making LifeGraph a cornerstone for responsible, high-performance RAG deployments. It shifts RAG from a mere augmentation tool to a secure, ethical framework, paving the way for responsible AI innovation.

Pioneering the Future of AI with LifeGraph & RAG

LifeGraph delivers a uniquely powerful AI infrastructure for Retrieval-Augmented Generation (RAG), supporting a full spectrum of use cases—from standard vector search to advanced knowledge graph reasoning—while embedding blockchain-grade security and data governance at every layer.

Flexible Vector Storage in Smart Data Objects (SDOs):

LifeGraph enables you to tokenize and embed your content, storing the resulting vectors within Smart Data Objects (SDOs). This approach mirrors the functionality of leading vector databases, but with the added benefits of LifeGraph’s robust data governance. You can leverage built-in vector search queries to fine-tune proximity and accuracy, ensuring highly relevant retrievals for your AI applications. Unlike traditional vector databases, every SDO in LifeGraph carries its own security, consent, and audit policies, providing granular control and compliance by design.
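The general idea of pairing each stored vector with its own consent and audit rules can be illustrated generically. The sketch below is not LifeGraph's API or SDO schema: the `DataObject` class, the `allowed_purposes` field, the `max_distance` proximity threshold, and the audit log format are all hypothetical, used only to show how a consent check can run before similarity ranking.

```python
import math
from dataclasses import dataclass, field


@dataclass
class DataObject:
    """Hypothetical governed record; NOT the actual LifeGraph SDO schema."""
    object_id: str
    text: str
    vector: list[float]
    allowed_purposes: set[str] = field(default_factory=set)


def governed_search(objects, query_vector, purpose, max_distance, audit_log):
    """Return permitted objects within `max_distance`, nearest first.

    Consent is checked before any similarity ranking, and every returned
    object is recorded in `audit_log` together with the stated purpose.
    """
    permitted = [obj for obj in objects if purpose in obj.allowed_purposes]
    within_range = [
        (math.dist(obj.vector, query_vector), obj) for obj in permitted
    ]
    within_range = [pair for pair in within_range if pair[0] <= max_distance]
    within_range.sort(key=lambda pair: pair[0])
    results = [obj for _distance, obj in within_range]
    for obj in results:
        audit_log.append((obj.object_id, purpose))
    return results


# Illustrative records with toy 2-D vectors.
OBJECTS = [
    DataObject("a", "Lab result summary", [1.0, 0.0], {"research"}),
    DataObject("b", "Clinician visit note", [0.9, 0.1], {"treatment"}),
    DataObject("c", "Billing record", [0.0, 1.0], {"research"}),
]

audit_log = []
hits = governed_search(OBJECTS, [1.0, 0.0], "research", 0.5, audit_log)
print([obj.object_id for obj in hits], audit_log)
```

Tightening or loosening `max_distance` is one simple way to trade recall for precision; filtering on consent first means records without permission never enter the ranking at all.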

Hybrid RAG: Combining Vector Search with Graph Traversal:

LifeGraph excels at hybrid RAG architectures, where unstructured or structured data can be vectorized and stored as vertices within a knowledge graph. This allows you to run graph traversal queries alongside vector searches, dramatically enhancing the contextual accuracy and relevance of retrieved information. By uniting semantic similarity with relational context, LifeGraph enables your AI to pinpoint not just the right data, but the right relationships and pathways within your entire data ecosystem.
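One common way to combine the two retrieval styles is to use vector search to find entry points and then expand along graph edges. The sketch below shows that pattern in generic Python. It does not use LifeGraph's query interface: the `VERTICES` and `EDGES` data, the toy 2-D vectors, and the `hybrid_retrieve` function are assumptions made for illustration.

```python
import math

# Hypothetical vertices, each holding text and a toy 2-D embedding.
VERTICES = {
    "note_1": {"text": "Patient reports recurring headaches.", "vector": [0.9, 0.1]},
    "note_2": {"text": "Patient was prescribed aspirin.", "vector": [0.2, 0.8]},
    "paper_1": {"text": "Review of aspirin for tension headaches.", "vector": [0.6, 0.6]},
    "note_3": {"text": "Unrelated billing record.", "vector": [-0.7, 0.1]},
}

# Hypothetical undirected relationships between vertices.
EDGES = {
    "note_1": ["note_2"],
    "note_2": ["note_1", "paper_1"],
    "paper_1": ["note_2"],
    "note_3": [],
}


def cosine(a, b):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query_vector, k=1, hops=1):
    """Pick the top-k vertices by similarity, then add graph neighbors.

    Seeds come from vector search; each hop adds vertices one edge further out.
    """
    ranked = sorted(
        VERTICES,
        key=lambda name: cosine(VERTICES[name]["vector"], query_vector),
        reverse=True,
    )
    selected = ranked[:k]
    frontier = list(selected)
    for _ in range(hops):
        next_frontier = []
        for vertex in frontier:
            for neighbor in EDGES.get(vertex, []):
                if neighbor not in selected:
                    selected.append(neighbor)
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return selected


for name in hybrid_retrieve([1.0, 0.0], k=1, hops=2):
    print(name, "-", VERTICES[name]["text"])
```

In this toy data the prescription note is not especially similar to the query vector, so similarity alone would rank it low; it is pulled in because it is linked to the best-matching note.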

End-to-End Security, Compliance, & Data Lineage:

All RAG features in LifeGraph are natively integrated with SDO technology, ensuring that every piece of data—whether stored as a vector, a graph node, or an entity—retains full ownership, consent, immutability, audit trails, and lineage tracking. This is a significant leap beyond traditional open vector databases, which often struggle with security, compliance, and data governance.

From Standard to Advanced RAG—All in One Platform:

Whether you need simple vector search, hybrid graph-vector retrieval, or advanced knowledge graph reasoning, LifeGraph provides a unified, secure, and future-proof foundation for your augmented LLM needs. With LifeGraph, you don’t have to compromise between AI performance and enterprise-grade data stewardship—the platform is designed to deliver both, at scale.

Conclusion

RAG is revolutionizing AI by making models more accurate and adaptable. As we move toward graph-based implementations, platforms like BurstIQ LifeGraph highlight the future: secure, intelligent data ecosystems that prioritize privacy and trust. Whether you’re building a simple RAG app or tackling complex, regulated domains, understanding these elements will help you harness AI’s full potential. Stay tuned for more on emerging AI trends!