The Hidden Risks of AI:

Exploring Vulnerabilities in

Large Language Models

Exploring Vulnerabilities in Large Language Models

As artificial intelligence (AI) and large language models (LLMs) revolutionize industries worldwide, they bring unprecedented capabilities and new security challenges.

In traditional machine learning (ML) models, data engineering happens during the creation of the model. Training data is used to refine what is usually a single-use model. It takes significant time and specialized engineering skills to create and refine these models.

The emergence of LLMs has opened a whole new level of possibilities. LLMs are generated based on analyzing patterns in large data sets. Deep learning models learn once and then are applied broadly in a reusable, pre-trained manner. Rather than create specific models, behavior is driven by each particular prompt or query. This means the majority of data engineering happens promptly, creating a more responsive system that can operate at or near real-time. Because of this, implementing LLMs requires less engineering sophistication to create high-impact prompts. Highly specific tasks will continue to rely upon manual engineering, but LLMs accelerate processes significantly.

However, the advent of LLMs brings with it a new wave of security vulnerabilities. Like many new technology innovations, security wasn’t necessarily designed as an inherent feature. Vulnerabilities and exploits may not be fully evident until broader adoption occurs.

In this blog, we examine vulnerabilities in AI systems, particularly LLMs, and the potential ways malicious actors might exploit them.

DATA POISONING:

Corrupting the Foundation

AI, ML, and LLM models are only as good as the data they’re trained on. Data poisoning occurs when attackers inject malicious or biased information into training datasets. This can cause models to learn incorrect patterns, leading to skewed outputs or compromised decision-making. For example, in image recognition, subtle alterations to training images could cause misclassification of critical objects. In natural language processing, injecting biased language could produce discriminatory content in the model.

MODEL INVERSION:

Unveiling the Private

Some AI models inadvertently memorize portions of their training data. Through careful querying, attackers can extract sensitive information used during training. This is particularly concerning for models trained on confidential data, such as medical records or financial information.

MEMBERSHIP INFERENCE:

The Digital Fingerprint

Membership inference attacks aim to identify if a specific data point was part of the model’s training set. This can lead to privacy breaches and the de-anonymization of individuals in supposedly anonymous datasets. Attackers observe the model’s behavior and confidence levels when processing specific inputs to determine data presence in the training set.

ADVERSARIAL ATTACKS:

Fooling the System

AI models can be tricked by inputs designed to cause misclassification while appearing normal to human observers. This type of attack can be particularly damaging in systems that rely on accurate data, such as medical diagnosis tools.

PROMPT INJECTION:

Manipulating the Conversation

LLMs are vulnerable to carefully crafted prompts that can bypass safety measures or manipulate the model’s behavior. An attacker might embed hidden instructions within a seemingly innocent query, causing the model to ignore ethical guidelines or reveal sensitive information.

MODEL STEALING:

Intellectual Property at Risk

Model stealing involves reconstructing a proprietary AI model’s functionality by extensively querying it. Attackers can use the output from these models to train a new model (a “knock-off”) that mimics the original with high accuracy.

data leakage:

Unintended Disclosures

LLMs can sometimes memorize sensitive information from their training data. For instance, if a model has been trained on datasets containing personally identifiable information (PII), it may inadvertently reproduce this information in its responses. This can occur when users prompt the model in a way that triggers the recall of this sensitive data.

Uncontrolled Data Absorption:

The Copyright Conundrum

Public AI models, particularly large language models (LLMs), can consume and learn from vast amounts of data available on the data that an organization may use when using these models, including potentially proprietary or copyrighted information. For example, employees at Samsung reportedly used ChatGPT for coding assistance and inadvertently input confidential data, including proprietary source code and internal meeting notes related to hardware development.

Hallucinations:

When AI Invents Reality

AI models, especially language models with limited training, or with prompts that extend beyond foundational model training, can sometimes generate false or misleading information and present it as factual. This “hallucination” phenomenon can spread misinformation, potentially damaging reputations or influencing decision-making processes.

Transfer Learning Attacks:

Inherited Vulnerabilities

Pre-trained models used as a base for transfer learning may carry inherent weaknesses. Exploiting vulnerabilities in widely used base models could affect numerous downstream applications. This vulnerability arises because many AI applications use pre-trained models as a starting point, fine-tuning them for specific tasks. This could lead to widespread security issues or the propagation of harmful biases across multiple AI systems.

Backdoor Attacks:

Hidden Threats

Malicious actors could embed hidden functionalities within AI models. The vulnerability lies in the potential for attackers to insert triggers that activate harmful behaviors when specific inputs are provided, potentially compromising system integrity. These backdoors can be challenging to detect as the model behaves normally under most circumstances. The implications of backdoor attacks can be severe, especially in security-critical applications, as they allow attackers to control the model’s behavior in specific situations.

API & Infrastructure Vulnerabilities:

The Weak Link

The systems and infrastructure hosting AI models may have traditional cybersecurity weaknesses. Exploiting vulnerabilities in APIs, servers, or networks to gain unauthorized access or disrupt AI services is a significant concern. This vulnerability extends beyond the AI model itself to encompass the entire ecosystem in which it operates. For example, SQL injection, cross-site scripting, denial of service attacks, or exploiting misconfigurations in cloud services.

Your first line of defense:

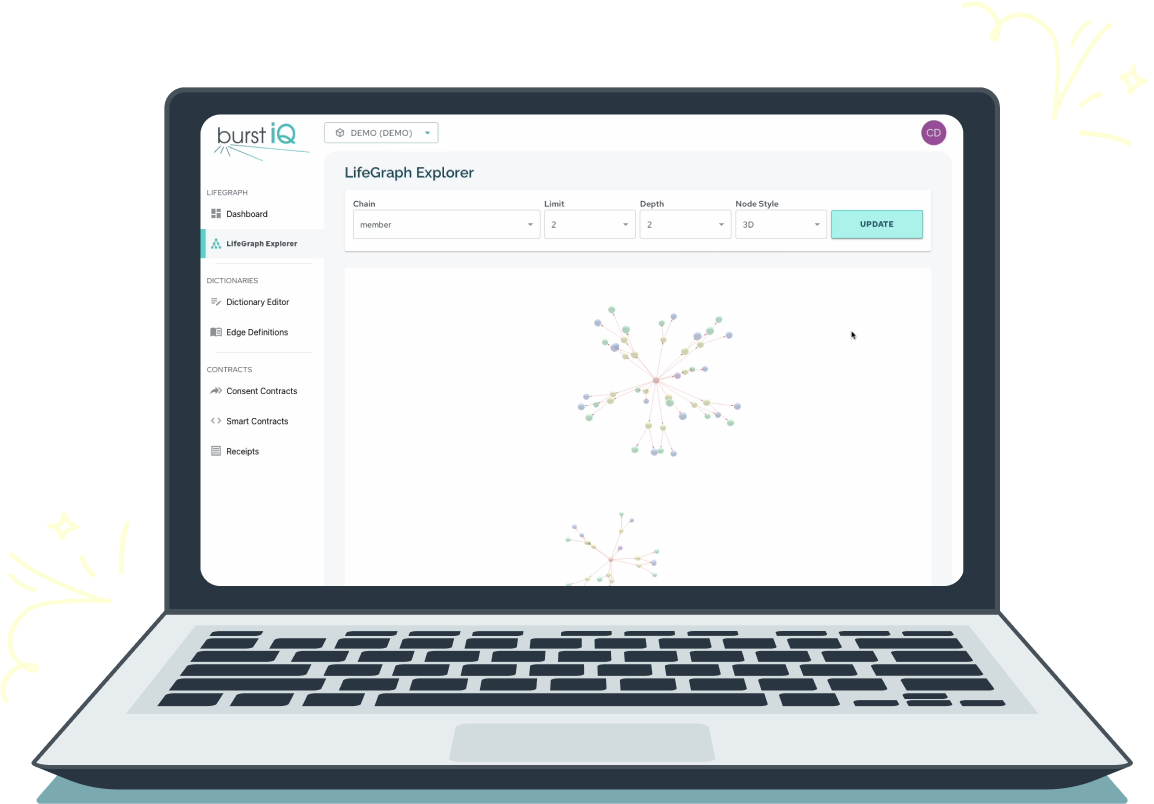

Building privacy and security into the very fabric of your data strategies can protect your organization from vulnerabilities that can derail your AI efforts. LifeGraph® from BurstIQ is an advanced data management platform that turns all your data into smart data to give you a solid foundation built on AI-ready data. What is smart data? Smart data holds all the context about each individual data asset along with cryptographic privacy and security, giving you complete trust in the data you use to train your AI models and, therefore, trustworthy AI outputs.

Employing blockchain and Web3 services, the LifeGraph platform provides a means to share data in a controlled manner using smart and consent contracts. The platform orchestrates data sharing in compliance with your organization’s governance standards, protecting sensitive data from being shared to train AI models inadvertently.

Additionally, the LifeGraph platform connects data through knowledge graphs. Knowledge graphs provide LLMs with structured, explicit representations of factual knowledge about concepts, entities, and their relationships. This structured knowledge enhances LLMs’ reasoning capabilities, allowing them to generate more accurate and reliable outputs. Essentially, knowledge graphs act as a factual backbone for LLMs, reducing the risk of hallucinations and ensuring the generated content is grounded in verified information.

Conclusion:

Balancing Innovation and Security

As AI advances, it’s crucial to remain vigilant about potential vulnerabilities. By understanding these risks and implementing robust security measures, we can work towards creating AI systems that are not only powerful but also secure, reliable, and trustworthy. The future of AI is undoubtedly promising, but it requires a proactive approach to security. As we push the boundaries of what’s possible with artificial intelligence, let’s ensure we’re building a technological future prioritizing innovation and safety.

About BurstIQ:

LifeGraph® by BurstIQ revolutionizes organizational data with advanced data management, privacy-enhancing technology, and knowledge graphs. The platform transforms data into a powerful asset, eliminating silos and providing a single, secure source of truth. LifeGraph reveals hidden connections within complex data sets, enabling easier analysis, insightful collaboration, and innovative decision-making. Organizations leverage LifeGraph to modernize legacy data repositories, driving value and fostering a culture of innovation for the future.

For more information about how LifeGraph can help you make data your superpower, please connect with us here.